What is Okahu¶

Overview¶

AI observability is the proactive monitoring of your AI apps and the cloud infrastructure they run on, so you can understand how to make them work better.

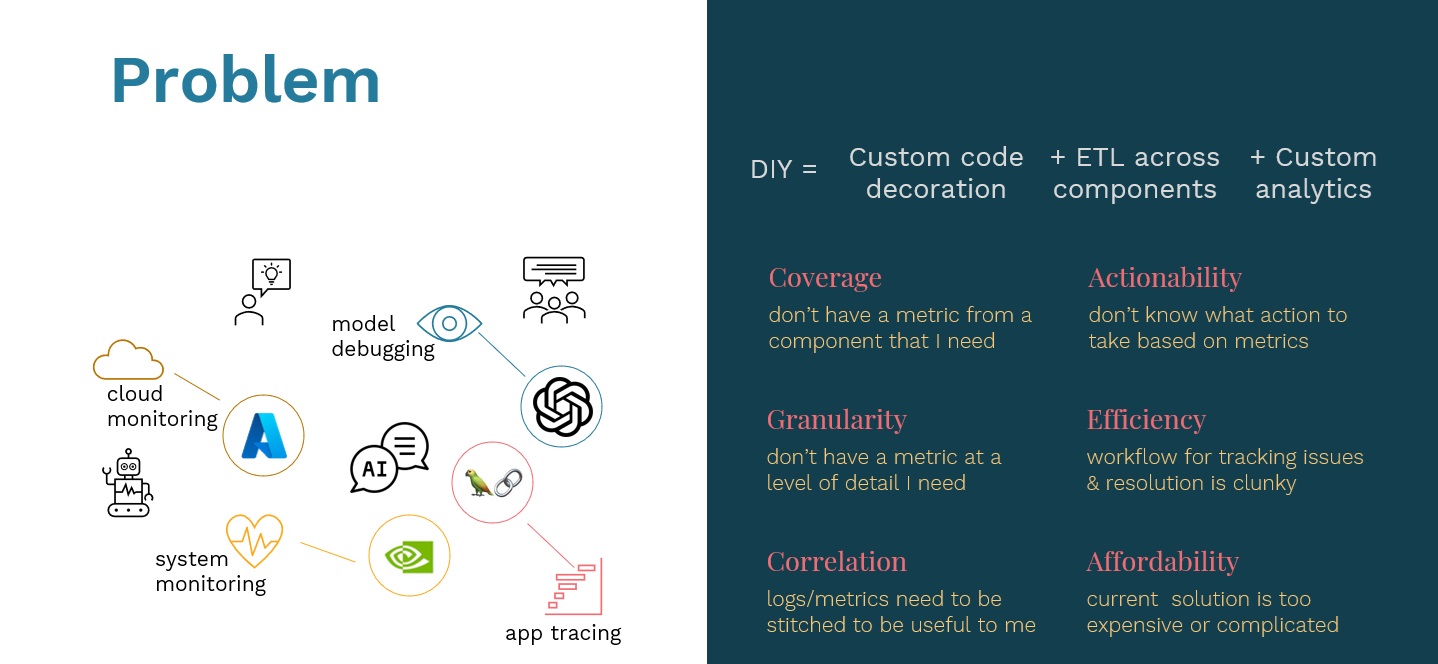

Problem¶

Today's GenAI applications are complex. They comprise multiple technology components, such as workflow code, models, model hosting services, and vector databases. Monitoring each component's behavior, tracking the correlations between components, and understanding the big picture is very hard.

Solution¶

Okahu captures traces and metrics from all of a GenAI application's components. We automatically discover the relationships between components and build the big picture. Okahu lets you define goals such as performance and reliability, track your application's behavior, and identify and resolve problems.

Getting Started¶

Explore Okahu playground¶

Log in to the Okahu portal with your GitHub or LinkedIn account. We'll create a tenant for you with sample data.

You can explore the Okahu playground to see what capabilities Okahu offers.

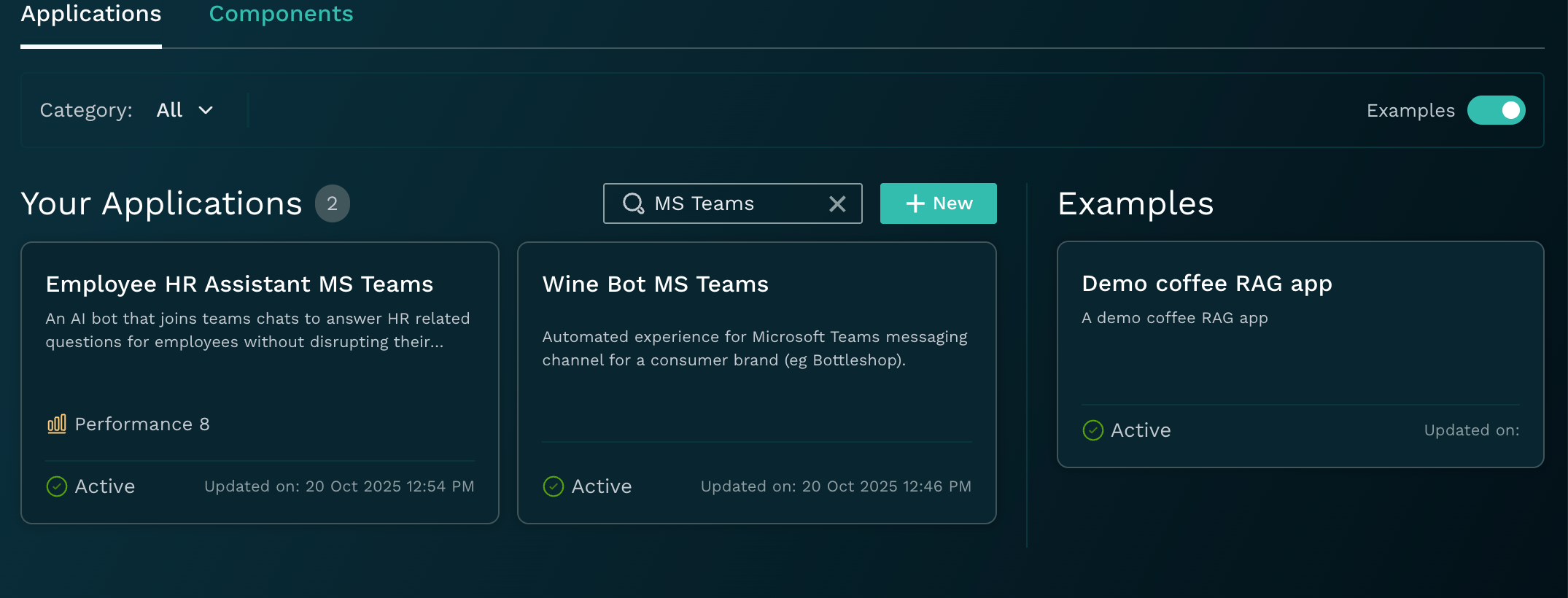

Application insights¶

Okahu uses trace and metric data to measure your application's behavior against your predefined goals.

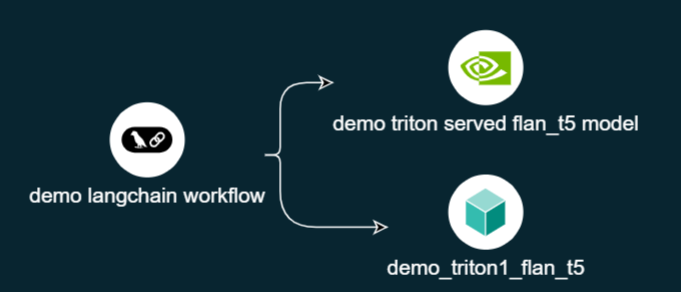
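To make the idea of measuring behavior against a goal concrete, here is a minimal sketch. It is not Okahu's implementation: the `Span` and `Goal` types, their field names, and the thresholds are all hypothetical, chosen only to illustrate how trace data could be compared with a latency goal and a reliability goal.

```python
import math
from dataclasses import dataclass


@dataclass
class Span:
    """Hypothetical, simplified trace record for one request."""
    name: str
    duration_ms: float
    status: str  # "ok" or "error"


@dataclass
class Goal:
    """Hypothetical goal definition: thresholds the app should stay under."""
    max_p95_latency_ms: float
    max_error_rate: float


def p95(values):
    """Nearest-rank 95th percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return ordered[rank - 1]


def evaluate(spans, goal):
    """Compare observed latency and error rate with the goal thresholds."""
    latency = p95([s.duration_ms for s in spans])
    error_rate = sum(s.status == "error" for s in spans) / len(spans)
    return {
        "p95_latency_ms": latency,
        "error_rate": error_rate,
        "latency_goal_met": latency <= goal.max_p95_latency_ms,
        "reliability_goal_met": error_rate <= goal.max_error_rate,
    }


# Ten sample requests: durations 100..1000 ms, one of them failed.
sample_spans = [
    Span("inference", 100.0 * i, "error" if i == 3 else "ok")
    for i in range(1, 11)
]
report = evaluate(sample_spans, Goal(max_p95_latency_ms=1200.0, max_error_rate=0.05))
print(report)
```

In this sample the latency goal is met while the reliability goal is not, which is the kind of signal an insights view would surface.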

Component map¶

Okahu automatically discovers the GenAI components used by your application and their dependencies to build the big picture.

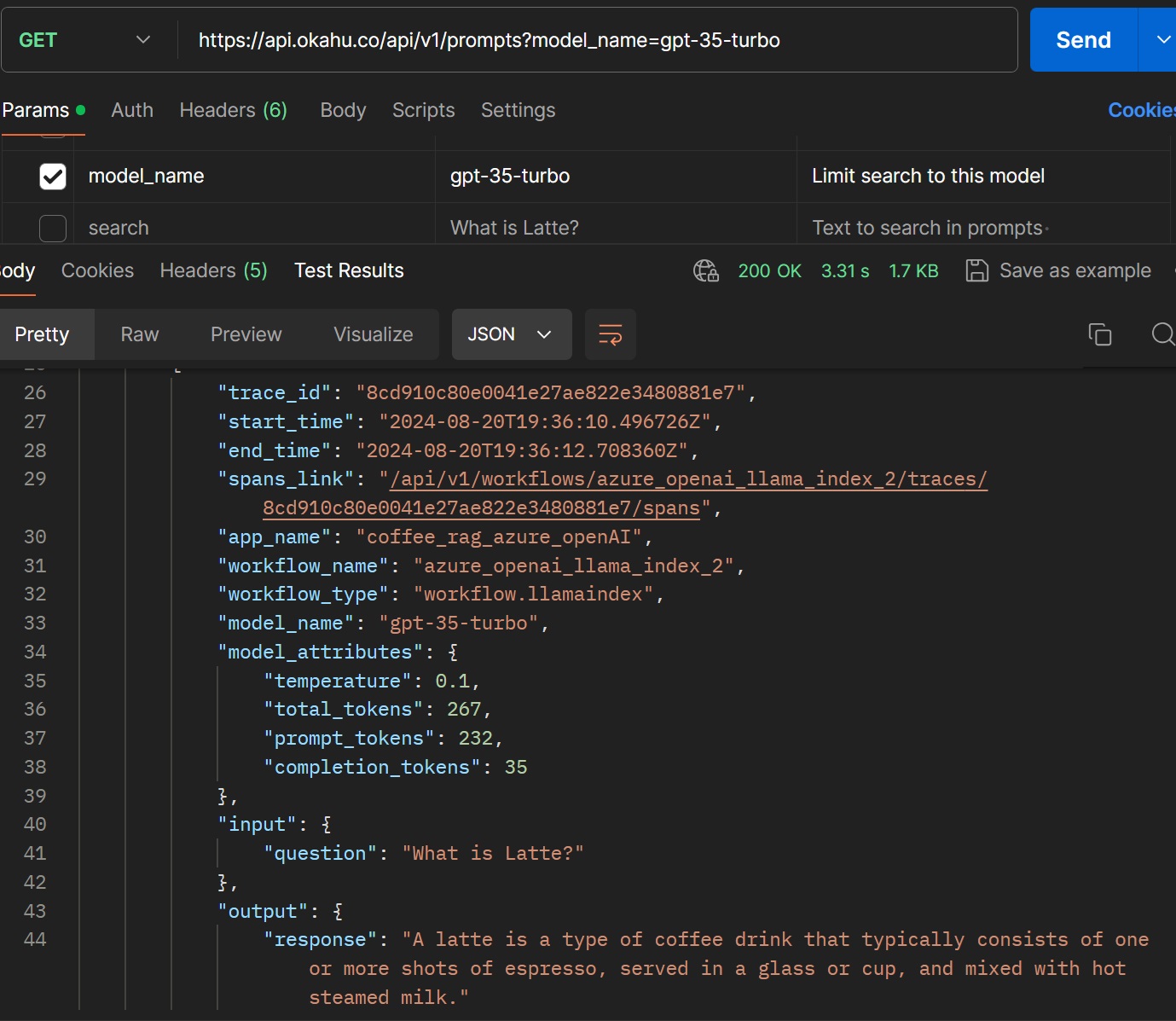
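One way to picture dependency discovery is to derive caller-to-callee edges from the parent/child links in trace spans. The sketch below assumes a hypothetical span shape (`span_id`, `parent_id`, `component`); it illustrates the general technique and is not a description of how Okahu builds its map.

```python
from collections import defaultdict


def build_component_map(spans):
    """Return {caller_component: set_of_callee_components}.

    Each span is a dict with hypothetical keys:
    span_id, parent_id (None for a root span), and component.
    """
    by_id = {span["span_id"]: span for span in spans}
    edges = defaultdict(set)
    for span in spans:
        parent = by_id.get(span.get("parent_id"))
        if parent is None:
            continue  # root span, or parent not captured in this batch
        caller, callee = parent["component"], span["component"]
        if caller != callee:  # ignore calls within the same component
            edges[caller].add(callee)
    return dict(edges)


# Sample spans for a single request through a retrieval workflow.
sample_spans = [
    {"span_id": "1", "parent_id": None, "component": "workflow"},
    {"span_id": "2", "parent_id": "1", "component": "vector_db"},
    {"span_id": "3", "parent_id": "1", "component": "model"},
    {"span_id": "4", "parent_id": "3", "component": "model_hosting"},
    {"span_id": "5", "parent_id": "1", "component": "workflow"},
]
component_map = build_component_map(sample_spans)
print(component_map)
```

Aggregating these edges over many traces gives a graph of which components depend on which.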

Inference and Prompts¶

Okahu offers a full range of REST APIs for using Okahu's capabilities programmatically. Clone Okahu's Postman collection to explore the REST APIs. You'll need an API key, which can be retrieved from the settings tab in the Okahu portal. The example above shows the details of the prompt used in an inference request by the demo application.
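As a rough sketch of calling a REST API with an API key from Python, the snippet below builds an authenticated request using only the standard library. The base URL, the `/prompts` path, the `limit` parameter, and the `x-api-key` header name are placeholders and assumptions, not documented Okahu values; take the real ones from the Postman collection.

```python
import json
import urllib.parse
import urllib.request

# Placeholders: replace with the values from Okahu's Postman collection.
BASE_URL = "https://api.example.invalid"
API_KEY_HEADER = "x-api-key"  # assumed header name, not confirmed


def build_request(path, api_key, params=None):
    """Build a GET request that carries the API key in a header."""
    query = "?" + urllib.parse.urlencode(params) if params else ""
    return urllib.request.Request(
        BASE_URL + path + query,
        headers={API_KEY_HEADER: api_key, "Accept": "application/json"},
        method="GET",
    )


def fetch_json(request, timeout=10):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# "/prompts" and "limit" are hypothetical names used for illustration.
request = build_request("/prompts", api_key="YOUR_API_KEY", params={"limit": 5})
print(request.full_url)
# data = fetch_json(request)  # uncomment once real values are filled in
```

Keeping request construction separate from the network call makes it easy to inspect the URL and headers before sending anything.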

Infrastructure metrics¶

Okahu collects metrics such as GPU and CPU utilization from infrastructure services like Azure OpenAI or NVIDIA Triton. You can view these metrics using Okahu's REST APIs.

Monitor your app in Okahu¶

Once you have explored the playground and understand the value Okahu provides, it's time to monitor your own application.

1. Local Development¶

Okahu started a community-driven open source project called Monocle under Linux Foundation Data & AI. Monocle provides a Python library that enables GenAI developers to generate traces from their application runs. You can review these traces locally to help build and tune the application. Please refer to the Monocle user guide to enable your application.

We invite you to get involved in Monocle. Your suggestions and contributions are very welcome!

2. Okahu Cloud¶

Okahu extends Monocle's technology so you can graduate from a local development environment to pre-production and production, where you want to collaborate with other stakeholders such as developers, DevOps, and leadership. You can then define your application in Okahu and track your goals. Please refer to the Okahu application guide to learn how to enable your application.

3. On-prem Okahu¶

Okahu collects metrics and traces from your infrastructure components, such as Azure OpenAI, AWS SageMaker, or NVIDIA Triton. You need to deploy and configure the Okahu agent in the on-prem environment where these services are running. For more details, please refer to Okahu's infra agent guide.

Provide feedback¶

We welcome your feedback. Please email us at cx@okahu.ai.